It may come as a surprise, but PwC found that 62% of executives still rely more on experience and advice than on data to make business decisions. As a result, many of them may struggle to achieve the speed and sophistication in strategic planning that today’s business landscape demands.

If you’re here, you probably don’t want to operate in the dark. You likely have a pressing need for relevant, up-to-the-minute information, especially from news sources. This article looks at article scraping as one effective way to obtain quality data from the web.

What does news scraping mean?

News scraping is the automated process of extracting and collecting news content from various online sources. To better explain what mining articles involves, consider this scenario.

You’re always on the lookout for the latest news articles and blog posts. Instead of spending hours surfing the web, you let scraping software do the heavy lifting. It finds and retrieves content on specific topics or keywords, or entire articles: whatever you’re interested in.

What can you get from mining articles?

Generally speaking, extracting articles from web pages can pay off. News sources are brimming with public data, reviews, industry updates, and many other data points your business may find valuable. These insights allow you to:

- Conduct media monitoring and keep an eye on brand reputation

- Spot trends early so you can respond to them promptly

- Curate content and create articles your audience will find interesting

- Work out an SEO strategy based on keywords extracted from articles

The value of web scraping for business is hard to overstate. Here are the results you can expect to achieve.

Operate with efficiency

Each day, we create 328.77 million terabytes of data, and news articles are part of this flood. If you collect news manually, you may struggle to sift through relevant information quickly, both because of the sheer data volume and the limited resources at hand, to say nothing of tracking news in real time. If you have to follow hundreds of sources, it becomes a near-impossible mission.

Automated data scraping lets you scan and aggregate information from news articles continuously. Many news scraping tools support real-time aggregation, so you receive insights while they are still timely.

Also, think of the countless hours you’ll save when you’re not manually searching for and analyzing news data.

💡 Employees who use automation report that it saves them 3.6 hours a week, which adds up to roughly 23 working days per year.

Additionally, manual research methods carry the risk of oversights, errors, and bias. News scraping reduces these risks and helps you get consistent, reliable data sets.

Minimize costs & risks

Every moment spent searching for, verifying, and manually analyzing data comes with labor costs. A report from Coveo noted that workers waste around 40% of their time searching for data. At an average annual salary of $80,000 for a data professional, that’s a potential waste of $32,000 per employee per year. Mining news articles automatically streamlines data collection and analysis, which can bring significant savings.

Beyond direct expenses, manual methods can expose your company to inaccuracies and time lags. According to Gartner, poor data quality costs organizations an average of $12.9 million a year and leads to poor decision-making. Moreover, a delay in accessing critical news can mean missed opportunities for your business. Automated scraping tools reduce inaccuracies and help you mitigate the risks of working with outdated information.

Predict trend shifts

News scraping helps you anticipate shifts in the business landscape. It enables:

- Real-time monitoring. Keep up with events as they unfold by continuously scraping news articles, spotting emerging patterns, and reacting to them quickly.

- Broad spectrum analysis. Pull data from diverse sources — be it mainstream media, niche blogs, or forums — to get a 360-degree view of the market.

- Data-driven forecasting. With the collected data at hand, you can use analytical tools and models to derive predictive insights. For instance, you can track the rise of specific keywords or the frequency of particular topics to gauge the momentum of a trend.
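To make the keyword-tracking idea concrete, here is a minimal Python sketch that counts how many scraped articles mention a keyword each day and compares the most recent week with the week before. It assumes articles arrive as (publication date, text) pairs; the function names, the 7-day window, and the sample headlines are hypothetical, and a real pipeline would likely use proper tokenization instead of plain substring matching.

```python
from collections import Counter
from datetime import date, timedelta


def mentions_per_day(articles, keyword):
    """Count articles per publication date whose text mentions `keyword`."""
    counts = Counter()
    for published, text in articles:
        if keyword.lower() in text.lower():  # naive, case-insensitive substring match
            counts[published] += 1
    return counts


def momentum(counts, end, window=7):
    """Ratio of mentions in the last `window` days to the `window` days before.

    A value above 1.0 suggests the topic is gaining coverage.
    """
    # Counter returns 0 for dates with no mentions.
    recent = sum(counts[end - timedelta(days=i)] for i in range(window))
    previous = sum(counts[end - timedelta(days=window + i)] for i in range(window))
    if previous == 0:
        return float("inf") if recent else 0.0
    return recent / previous


# Made-up headlines, for illustration only.
sample = [
    (date(2024, 1, 1), "Battery costs fall"),
    (date(2024, 1, 9), "New battery plant opens"),
    (date(2024, 1, 10), "Battery recycling expands"),
    (date(2024, 1, 12), "Interest rates unchanged"),
]
counts = mentions_per_day(sample, "battery")
print(momentum(counts, end=date(2024, 1, 14)))  # 2 recent vs 1 previous -> 2.0
```

A ratio rather than a raw count keeps the signal comparable across keywords with very different baseline coverage.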

Get insights about customers’ behavior

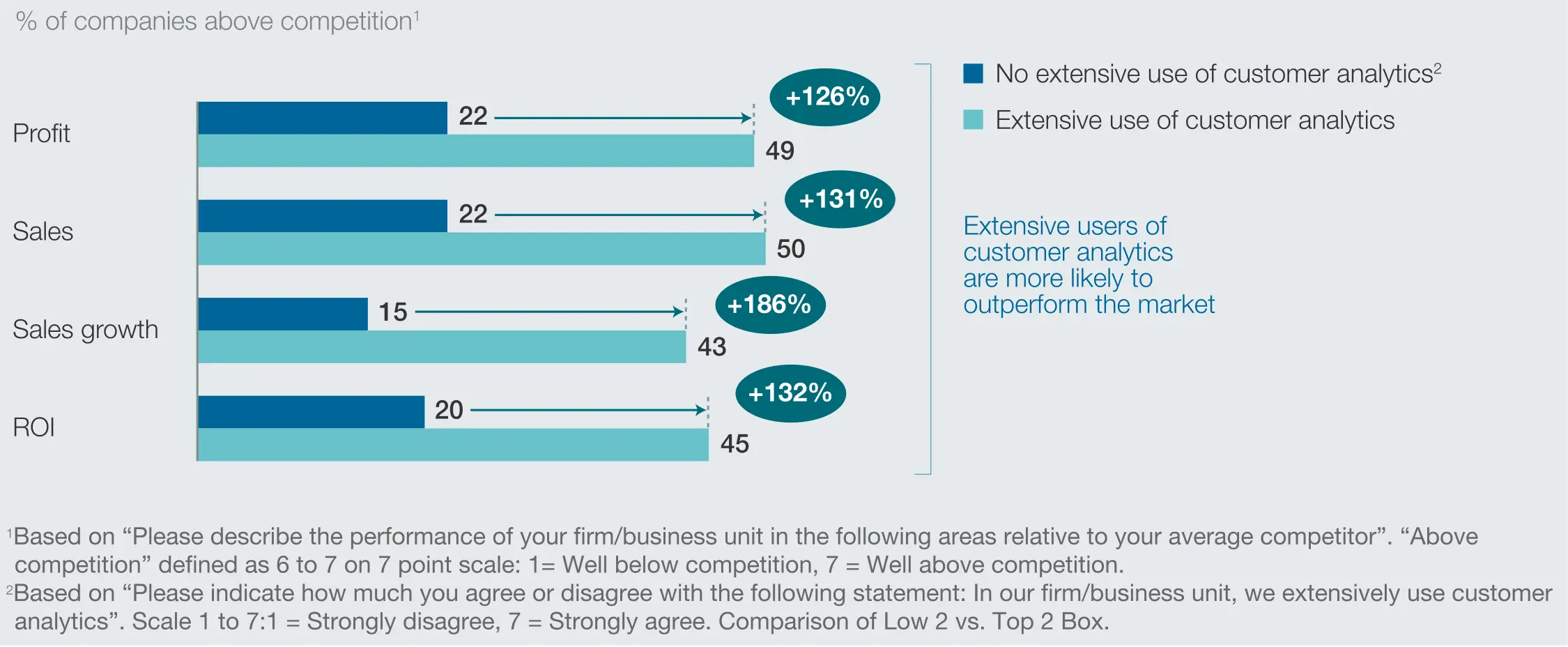

Just think of this: McKinsey found that companies that make heavy use of customer analytics are 23 times more likely to outperform their competitors in customer acquisition than companies that don’t, and 9 times more likely to outpace their rivals in customer loyalty. Not to mention an ROI that is 2.6 times higher than that of organizations that don’t rely on customer data.

At its core, news represents the pulse of society. Through news scraping, you can learn more about customer needs shaped by societal changes, technological innovations, and global events.

On top of that, many news articles come with comment sections, which are a great place to study direct consumer feedback, critiques, and suggestions.

Risks & limitations of web mining articles

While news data collection offers immense value, you should be aware of the hurdles that can pop up along the way.

- Legal concerns. Many websites have strict guidelines about data extraction. Ignore them, and you might land in legal hot water.

- Dynamic website structures. When a site undergoes design changes or updates its structure, the scraping tools you’re using might break or retrieve incorrect data, so you need to maintain and update your scraping scripts continuously.

- Anti-scraping mechanisms. Many news websites deploy anti-scraping tools to block or mislead web crawlers. These measures can range from CAPTCHAs to IP bans.

- Accuracy & reliability. Not every piece of news is gospel truth. There’s fake news, bias, and sometimes just plain errors, so data cleansing and verification are essential if you want reliable results.

- Data overload. Sometimes there can be “too much of a good thing.” With a deluge of data at hand, you can find yourself stuck at the analysis stage, struggling to pinpoint key insights, or draining more resources than you expected.

Is extracting articles from web pages legal?

To start with, web scraping is not illegal in itself. Automated tools emulate what a human user does when browsing website articles, only at a much faster pace. However, the devil is in the details, and there are circumstances where scraping can lead to legal issues.

Many websites have Terms of Service (ToS) or a user agreement that explicitly outlines what users can and cannot do. If this agreement prohibits automated data extraction, scraping the site would breach its terms.

In some regions, scraping personal data without consent may lead to substantial penalties. In Europe, the General Data Protection Regulation (GDPR) governs how personal data, including scraped personal data, may be collected and processed. In the USA, the Computer Fraud and Abuse Act (CFAA) and the California Consumer Privacy Act (CCPA) are among the relevant laws. Always be mindful of the nature of the data you extract and whether collecting it complies with privacy laws.

How to scrape news articles: detailed guide

1. Prepare for scraping news

Before you dive in, pinpoint exactly what you’re trying to achieve.

- Are you looking for articles from the past month, year, or perhaps a specific day? Determine a time range to collect data relevant to current trends or historical analysis.

- Perhaps you’re focusing on a particular subject matter, like technology advancements, political upheavals, or global health concerns. Clearly define your topic of interest to ensure your bot filters out unrelated articles and zeroes in on pertinent content.

- Are you targeting global news giants like BBC or CNN, or are you more inclined towards local news outlets? Maybe niche publications in specific industries? Determine the sources in advance for better scraping outcomes.

💡 Check the credibility and relevance of the websites you plan to scrape. Trusted outlets usually have rigorous editorial standards, which reduces the risk of gathering misleading information.
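Once you’ve settled on a time range, topic, and sources, it can help to encode them as an explicit filter your bot applies to every candidate article. The Python sketch below is one way to do that; the `Article` and `ScrapeScope` classes, the `example-news.com` domain, and the rule that a whole-word keyword match in the title is enough are assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass
class Article:
    url: str
    title: str
    published: date


@dataclass
class ScrapeScope:
    """Hypothetical container for the scope you defined before scraping."""
    start: date      # earliest publication date to keep
    end: date        # latest publication date to keep
    keywords: tuple  # topic terms; a whole-word match in the title is enough
    domains: tuple   # allowed sources, e.g. ("example-news.com",)

    def matches(self, article):
        in_range = self.start <= article.published <= self.end
        on_topic = any(
            re.search(rf"\b{re.escape(k)}\b", article.title, re.IGNORECASE)
            for k in self.keywords
        )
        host = (urlparse(article.url).hostname or "").lower()
        # Accept subdomains, so "www.example-news.com" counts as "example-news.com".
        from_source = any(host == d or host.endswith("." + d) for d in self.domains)
        return in_range and on_topic and from_source


scope = ScrapeScope(date(2024, 1, 1), date(2024, 1, 31), ("battery", "EV"), ("example-news.com",))
candidates = [
    Article("https://www.example-news.com/a", "EV battery plant opens", date(2024, 1, 15)),
    Article("https://other.example.org/b", "EV sales rise", date(2024, 1, 15)),
    Article("https://example-news.com/c", "Battery prices", date(2023, 12, 30)),
]
kept = [a for a in candidates if scope.matches(a)]
print([a.url for a in kept])  # ['https://www.example-news.com/a']
```

Word-boundary matching avoids false hits such as "EV" inside "events", which a plain substring check would accept.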

2. Select the right article scraping method

The tool you select for data harvesting should match your objectives, technical proficiency, and the volume of data you need. Let’s break down the main methods to help you find the most suitable approach.

| Method | What is it? | Best for | Drawbacks |
|---|---|---|---|
| Manual | Manually visiting each web page and copying the required information. | Small-scale projects where the volume of data is limited. | Highly time-consuming and not feasible for larger, more complex data extraction needs. |
| API | Interfaces provided by websites that allow structured access to their data. | Those looking for reliable, consistent, up-to-date information without the need to scrape continuously. | Not all websites offer APIs, and those that do might limit the amount or type of data you can access. |
| Software | Tools designed specifically to automate the process of web scraping. | Businesses that need regular data extraction but wish to retain control of the scraping process. | Some learning curve might be involved, especially if the software requires coding knowledge. |

You’ll also need to decide whether to handle scraping in-house or outsource it to a third-party provider of managed article mining services.

In-house news scraping is a good choice if you have a dedicated team within your organization to handle all web scraping needs. It works best for large corporations or research entities where data extraction is a continuous and vital process. However, it can be resource-intensive: you’ll need to hire and train staff and provide the appropriate infrastructure.

Outsourcing scraping is a common pick for businesses that want high-quality data without the hassle of direct oversight or the intricacies of the scraping process. The trade-off is that you depend on an external provider for data collection, which not every business is comfortable with.

3. Build a respectful bot

Before you start extracting articles from news sites and blogs, you’ll need to select a scraping tool. Options range from simple browser extensions to more advanced platforms; the right choice depends on your needs, tech skills, and scraping objectives.

- As you set up the scraper, follow the site’s robots.txt file. It specifies which parts of the website crawlers may access, so checking it lets you respect the boundaries site owners set.

- Mind the rate. Sending numerous requests in quick succession can overload servers, degrade website performance, or even crash the site. So, space out your requests to avoid disrupting the regular functioning of the target website.

- If your bot needs to visit a site multiple times, consider caching the results. Caching reduces the number of requests you send, so the bot only fetches what’s essential and avoids unnecessary strain on the website’s resources.

- A website’s structure, layout, and even the way it serves content can change. Make sure your bot is adaptive: it should recognize such changes and adjust its scraping techniques accordingly.

- Equip your bot to handle errors. If it encounters a page that’s hard to scrape or gets a response indicating it should not proceed, it should be able to move on without causing disruptions.
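The practices above (respecting robots.txt, spacing out requests, caching, and handling errors) can be combined in one small fetcher. This is a minimal Python sketch using only the standard library, not a production crawler; the bot name, the 5-second default delay, the `PoliteFetcher` class itself, and the choice to treat an unreachable robots.txt as "disallow everything" are assumptions you should adjust.

```python
import logging
import time
import urllib.error
import urllib.request
import urllib.robotparser
from urllib.parse import urlparse

# Hypothetical bot identity; use a real contact URL for your own crawler.
USER_AGENT = "ExampleNewsBot/0.1 (+https://example.com/bot-info)"
DISALLOW_ALL = ["User-agent: *", "Disallow: /"]


class PoliteFetcher:
    """Fetch pages while honoring robots.txt, a per-host delay, and a cache."""

    def __init__(self, min_delay=5.0, fetch=None):
        self.min_delay = min_delay            # seconds between requests to one host
        self.fetch = fetch or self._http_get  # swap in a fake for testing
        self.robots = {}                      # host -> parsed robots.txt
        self.last_request = {}                # host -> time.monotonic() of last request
        self.cache = {}                       # url -> page text

    @staticmethod
    def _http_get(url):
        request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.read().decode("utf-8", errors="replace")

    def _robots_for(self, url):
        parts = urlparse(url)
        if parts.netloc not in self.robots:
            try:
                lines = self.fetch(f"{parts.scheme}://{parts.netloc}/robots.txt").splitlines()
            except urllib.error.HTTPError as err:
                # A missing robots.txt (404) conventionally means "no restrictions".
                lines = [] if err.code == 404 else DISALLOW_ALL
            except OSError:
                lines = DISALLOW_ALL  # unreachable or unreadable: stay conservative
            parser = urllib.robotparser.RobotFileParser()
            parser.parse(lines)
            self.robots[parts.netloc] = parser
        return self.robots[parts.netloc]

    def _wait_turn(self, host, delay):
        # Sleep just long enough to keep `delay` seconds between requests to `host`.
        elapsed = time.monotonic() - self.last_request.get(host, float("-inf"))
        if elapsed < delay:
            time.sleep(delay - elapsed)
        self.last_request[host] = time.monotonic()

    def get(self, url):
        """Return the page text, or None if robots.txt disallows it or the request fails."""
        if url in self.cache:
            return self.cache[url]  # served from cache: no new request
        robots = self._robots_for(url)
        if not robots.can_fetch(USER_AGENT, url):
            return None  # respect the boundaries the site owner set
        # Honor a Crawl-delay directive if it is longer than our own minimum.
        delay = max(self.min_delay, robots.crawl_delay(USER_AGENT) or 0)
        self._wait_turn(urlparse(url).netloc, delay)
        try:
            text = self.fetch(url)
        except OSError as err:  # URLError and HTTPError are OSError subclasses
            logging.warning("Skipping %s: %s", url, err)
            return None  # move on instead of crashing the whole run
        self.cache[url] = text
        return text
```

In use, you would create one `PoliteFetcher` per crawl and call `fetcher.get(url)` for each article URL; a `None` result means the page was skipped. The `fetch` parameter exists so the class can be exercised without network access, and the cache lives only in memory, so a long-running bot would likely persist it.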

4. Structure & store data

Once your bot has collected information from the news sources, you should transform the raw information into a structured format and store it in a secure place.
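As an illustration of turning raw HTML into a structured record, the sketch below uses Python’s built-in `html.parser` to pull the page title, a few common `<meta>` tags, and paragraph text. It assumes the site exposes Open Graph-style tags such as `og:title` and `article:published_time`, which many but not all news sites do; real scrapers usually need site-specific rules, and the `ArticleParser` class, the `extract_article` helper, and its field names are hypothetical.

```python
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Collect the <title>, a few <meta> tags, and the text of <p> elements."""

    # Common meta keys; many (not all) news sites publish them.
    WANTED_META = {"og:title", "article:published_time", "author"}

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.title = ""
        self.paragraphs = []
        self._in_title = False
        self._in_p = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "p":
            self._in_p = True
            self._buffer = []
        elif tag == "meta":
            key = attrs.get("property") or attrs.get("name")
            if key in self.WANTED_META and attrs.get("content"):
                self.meta[key] = attrs["content"]

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "p" and self._in_p:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if text:
                self.paragraphs.append(text)
            self._in_p = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_p:
            self._buffer.append(data)  # includes text inside nested tags like <a>


def extract_article(html):
    """Turn raw HTML into a flat record; missing fields come back as None."""
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.meta.get("og:title") or parser.title.strip() or None,
        "published": parser.meta.get("article:published_time"),
        "author": parser.meta.get("author"),
        "body": "\n".join(parser.paragraphs) or None,
    }
```

Preferring `og:title` over `<title>` is a design choice: the `<title>` element often carries the site name or SEO suffixes, while the Open Graph tag usually holds the clean headline.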

The first step is to clean the collected data. This means filtering out extraneous or irrelevant content, correcting any inaccuracies, and filling in missing data where possible.
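A minimal cleaning pass might look like the following Python sketch. It assumes each scraped item is a dict with `title`, `body`, and optionally `author` keys; the `clean_records` helper, the 50-word threshold, the `"unknown"` placeholder, and hash-based exact-duplicate detection are illustrative choices. Verifying factual accuracy still requires human or source-level checks that no script can replace.

```python
import hashlib


def clean_records(records, min_words=50, placeholder="unknown"):
    """Normalize, filter, and deduplicate scraped article records (hypothetical helper).

    `min_words` is an arbitrary threshold for discarding stubs, teasers, and empty pages.
    """
    seen = set()
    cleaned = []
    for record in records:
        title = " ".join((record.get("title") or "").split())
        body = " ".join((record.get("body") or "").split())
        # Filter out irrelevant or broken items: no title, or too little text.
        if not title or len(body.split()) < min_words:
            continue
        # Drop duplicates that are identical after whitespace and case normalization,
        # e.g. the same wire story republished on several sites.
        fingerprint = hashlib.sha256(body.lower().encode("utf-8")).hexdigest()
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        # Fill a missing optional field with an explicit placeholder.
        cleaned.append({**record, "title": title, "body": body,
                        "author": record.get("author") or placeholder})
    return cleaned
```

Using an explicit placeholder rather than leaving `None` makes gaps visible later, when you analyze or export the data.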

Data extracted from websites often comes in a varied mix of formats, so decide whether you’ll transform the raw information into JSON, CSV, XML, or another data format.
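For example, the same records can be written out as JSON Lines or CSV with Python’s standard library alone. The `FIELDS` list below is a hypothetical schema, and the two helper functions are illustrative. JSON Lines keeps one article per line, which makes appending and streaming easy, while CSV is convenient for spreadsheets but flattens everything to text.

```python
import csv
import io
import json

# Hypothetical schema: the fields you decided to keep for each article.
FIELDS = ["url", "title", "published", "author", "body"]


def to_json_lines(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps({field: r.get(field) for field in FIELDS}, ensure_ascii=False)
        for r in records
    )


def to_csv(records):
    """Serialize records as CSV; missing fields become empty cells, extra keys are dropped."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

The `csv` module quotes fields that contain commas or line breaks, so article bodies survive the round trip intact.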

Then choose the right storage solution. You may select among:

- Relational databases (SQL) — best for structured data with clearly defined types and relationships.

- NoSQL databases (MongoDB or Cassandra) — perfect for varied data structures or data that may evolve over time.

- Cloud (AWS S3, Google Cloud Storage, or Azure Blob Storage) — especially useful if your data needs are expansive and grow dynamically.
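If you opt for a relational database, a single table keyed by article URL is often enough to start. The sketch below uses SQLite through Python’s built-in `sqlite3` module purely for illustration; the table layout and the `save_articles` helper are assumptions, and `INSERT OR REPLACE` means a re-scraped article overwrites its earlier version.

```python
import sqlite3

COLUMNS = ("url", "title", "published", "author", "body")

SCHEMA = """
CREATE TABLE IF NOT EXISTS articles (
    url        TEXT PRIMARY KEY,   -- one row per article URL
    title      TEXT NOT NULL,
    published  TEXT,               -- ISO 8601 timestamp string
    author     TEXT,
    body       TEXT NOT NULL,
    scraped_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""


def save_articles(conn, records):
    """Insert records, replacing any earlier version of the same URL."""
    rows = [{col: r.get(col) for col in COLUMNS} for r in records]  # missing keys -> NULL
    with conn:  # commits on success, rolls back if any insert fails
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT OR REPLACE INTO articles (url, title, published, author, body) "
            "VALUES (:url, :title, :published, :author, :body)",
            rows,
        )


conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
save_articles(conn, [{"url": "https://news.example.com/a", "title": "Draft", "body": "v1"}])
save_articles(conn, [{"url": "https://news.example.com/a", "title": "Final", "body": "v2"}])
print(conn.execute("SELECT title, body FROM articles").fetchall())  # [('Final', 'v2')]
```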

5. Post-scraping maintenance & analysis

News evolves rapidly, so consider periodic re-scraping to keep your data fresh and relevant. Implement mechanisms that detect changes or updates on source websites and trigger re-scraping. This automation reduces manual oversight and helps ensure timely data renewal.
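One simple way to detect changes is to fingerprint each monitored page, such as a section front page or feed, and re-scrape only when the fingerprint differs from the last run. This Python sketch assumes you already fetch the page text; the function names are hypothetical, and because raw HTML often contains rotating ads or timestamps, fingerprinting only the extracted headline list is usually more robust.

```python
import hashlib


def content_fingerprint(text):
    """Hash the text with whitespace collapsed, so whitespace-only edits don't count as changes."""
    return hashlib.sha256(" ".join(text.split()).encode("utf-8")).hexdigest()


def changed_pages(snapshots, known):
    """Return URLs whose content differs from the last recorded fingerprint.

    `snapshots` maps URL -> freshly fetched text; `known` maps URL -> previous
    fingerprint and is updated in place, so it should be persisted between runs.
    """
    changed = []
    for url, text in snapshots.items():
        fingerprint = content_fingerprint(text)
        if known.get(url) != fingerprint:
            known[url] = fingerprint
            changed.append(url)  # candidate for re-scraping
    return changed


known = {}
section = "https://news.example.com/tech"
print(changed_pages({section: "<li>A</li>"}, known))              # ['https://news.example.com/tech']
print(changed_pages({section: "<li>A</li>"}, known))              # []
print(changed_pages({section: "<li>A</li> <li>B</li>"}, known))   # ['https://news.example.com/tech']
```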

Leverage tools like Tableau, Power BI, or Google Data Studio (now Looker Studio) to visualize and interpret your data.

Additionally, on top of regular backups, schedule periodic full backups that capture your entire dataset.

Take the next step

If you’re certain that news scraping is the solution your business needs to thrive, Nannostomus is here for you. We’re equipped with cutting-edge scraping solutions and a deep understanding of the data landscape to help you get the highest return on your investment in news scraping.

Whether you’re considering our software solution or contemplating managed scraping services, you’re partnering with a team deeply committed to transforming the way you perceive and utilize data.

Contact us today to get a free quote for your project.