Did you know that around 252,000 websites are created every day? On top of that, an estimated 2.5 quintillion bytes of new data are generated each day.

So, you’re probably wondering, “How can I tap into this wealth of information?” Great question. And the answer is: start with understanding the options for extracting website data. And that’s exactly what this article will help you with.

How businesses use data extraction from websites

The need for large amounts of data is higher than ever. That is why many companies automate extracting data from the web. Well-known companies are among them:

- OpenAI for large-scale website data collection to train AI models.

- Google for powering its search engine and ranking websites. It also scrapes the web for Google Maps and Google News.

- IBM for fueling their AI projects.

- Expedia for gathering data on hotel prices, flight details, and package deals to offer competitive pricing and options to their customers.

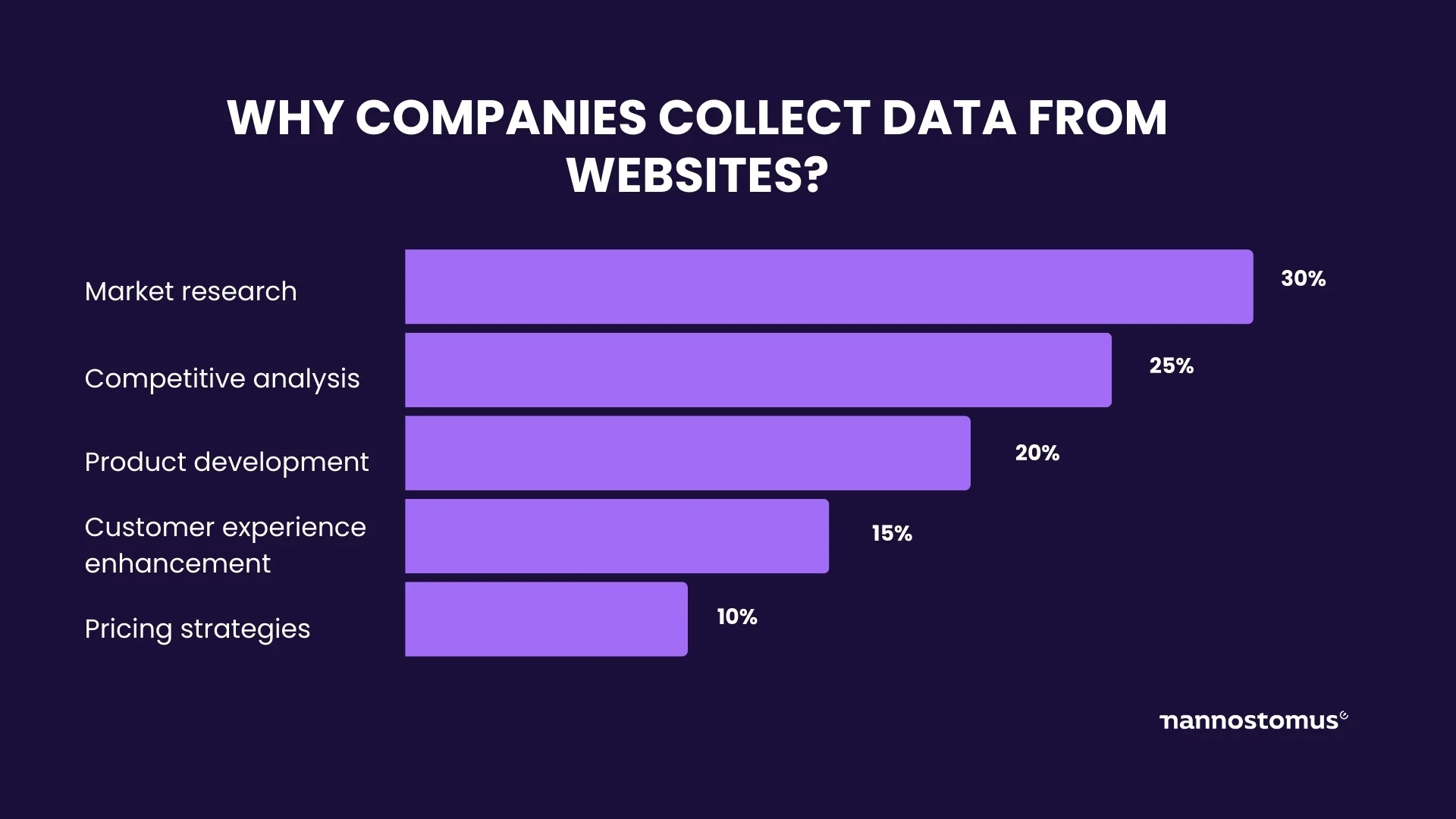

But what are the primary reasons companies retrieve data from websites? Check out the graphs.

Different ways of extracting data from websites

There’s more than one way to pull data from various websites. You can do it manually. With code. Or with low/no code. Which is the best method for you? Well, it depends on various factors:

- Level of technical expertise

- Data volume

- Data complexity

- Data collection frequency

- Budget

- Time

Next, we’ll explore each method—manual, with code, and low/no code—to give you a clear picture of your options.

How to gather data from websites manually

You visit the website, spot the data you need—text, images, tables, you name it. Then copy and paste it into your document or spreadsheet. It’s simple: see, select, copy, and paste.

When does this method work best for getting data from a website?

- Level of technical expertise. Perfect for those who aren’t tech-savvy. If coding sounds like a foreign language, this is your go-to method.

- Data volume. Ideal for small amounts of data.

- Data complexity. Works well with simple data structures. If it’s easily visible and can be highlighted, you’re good to go.

- Data collection frequency. Best for one-time or infrequent tasks.

- Budget. Cost-effective since it’s free. All you need is your time and patience.

- Time. This method is time-intensive, so it works for you only if you’re not in a hurry.


How to scrape data from a website with code

If you want to scale your data collection activities, consider web scraping. It’s an automated process where a program or script browses the web and pulls data. So, here you (or a programmer) write a script that sends requests to websites. These scripts mimic human browsing to import data from a website and then store it in the format you need.

To get the job done, a developer uses a web scraping tool. This software sends requests to the target website’s server, much like a web browser requests a page when you want to view it. Once the tool accesses the webpage, it parses the HTML, XML, or JSON content to extract the specific data. This could be text, links, images, or other types of data. The extracted data is then processed:

- Cleaned of irrelevant or duplicate information

- Converted into a structured format (a spreadsheet or a database)

- Sometimes translated or reformatted
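The request, parse, clean, and convert steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production scraper. The function names (`fetch_html`, `extract_links`, `clean`, `to_csv`), the `example-scraper/0.1` user agent, and the sample HTML are all assumptions made for the example.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Hypothetical page fragment standing in for a real website's HTML.
SAMPLE_HTML = (
    '<ul>'
    '<li><a href="/a">Item A</a></li>'
    '<li><a href="/a">Item A</a></li>'
    '<li><a href="/b"> Item B </a></li>'
    '<li><a href="/c"></a></li>'
    '</ul>'
)


def fetch_html(url):
    """Request a page much like a browser would and return its HTML."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Collect the text and href of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._text).strip()
            self.links.append({"text": text, "url": self._href})
            self._href = None


def extract_links(html):
    """Parse the HTML and return the links found in it."""
    parser = LinkParser()
    parser.feed(html)
    return parser.links


def clean(records):
    """Drop records with empty fields and exact duplicates, keeping order."""
    seen = set()
    cleaned = []
    for record in records:
        key = (record["text"], record["url"])
        if not all(key) or key in seen:
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


def to_csv(records):
    """Convert the records into CSV text, ready for a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["text", "url"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    # Swap SAMPLE_HTML for fetch_html("https://...") to scrape a live page.
    print(to_csv(clean(extract_links(SAMPLE_HTML))))
```

Real projects usually swap the hand-written parser for a dedicated parsing library and add error handling, but the flow stays the same: fetch, parse, clean, store.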

When it comes to using code to pull data from a website, you’ve got two options: build an internal team or hire an outsourced managed team.

Assemble an internal team to extract info from web pages

When you opt for an internal team, it will be in charge of writing and maintaining web scraping scripts. You’ll need a mix of skilled professionals—data scientists, developers (proficient in Python, C#, JavaScript, or other languages), and a project manager.

Also, your in-house team will set up the necessary infrastructure. They’ll set up servers or cloud services, select the right tools and technologies, develop custom scripts tailored to specific websites and data types, update the code regularly to adapt to changes in web page structures, and manage databases or data lakes.

When it’s best to get data from another website with an in-house team:

- Level of technical expertise. High. You need a team with solid coding knowledge.

- Data volume. Large amounts of data.

- Data complexity. Suitable for complex data needs.

- Data collection frequency. Ideal for frequent, ongoing scraping tasks.

- Budget. Requires a significant budget for skilled staff and technology.

- Time. Time-effective in the long run, though initial setup may take time.


How to pull information from a website with a managed team

With an outsourced managed team for web scraping, you hire a specialized company. Usually, the company reps delve into your internal processes and start building a solution architecture immediately. However, it may take some time (from 2 to 3 weeks) to assemble a team.

Sure, the team’s composition is tailored to your project’s specific needs. But here’s a rule of thumb: you’ll need data scientists, web scraping experts, project managers, and a cloud architect. This team operates independently. The outsourced project manager communicates with your in-house product owner to ensure consistency and alignment with your objectives. The communication usually mirrors your company’s internal processes and tools.

The managed team develops a customized web scraping solution: it creates scripts, sets up data processing and storage systems, and troubleshoots any issues.

When extracting information from websites with a managed team is beneficial:

- Level of technical expertise. Low. No need for your own tech team.

- Data volume. Well-suited for both large and small-scale projects.

- Data complexity. Can handle complex data requirements.

- Data collection frequency. Better for regular scraping.

- Budget. Varies, but generally requires a significant investment.

- Time. Saves time as the external team manages everything, but may take time to assemble a team.


💡 What's the difference between web scraping outsourcing and hiring a managed team? Go and check out this article for a complete answer.

How to extract data from a web page with low/no code

Not a fan of coding? No worries. You can still harvest web data without writing a single line of code. Or with a few lines.

Buy a ready dataset

Sometimes, the best shortcut is the one already made. When you buy a ready dataset, you get the data you need without the hassle of extracting website data yourself. It’s all there, neat and tidy, just waiting for you to dive in.

When it’s the best way to download data from a website:

- Level of technical expertise. None required.

- Data volume. Good for both small and large volumes, as long as the dataset matches your needs.

- Data complexity. Best for when data complexity aligns with the pre-compiled dataset.

- Data collection frequency. Ideal for one-time needs or periodic updates.

- Budget. Varies.

- Time. Great for immediate needs.


Extract data from web pages using website-specific APIs

Website-specific APIs offer a neat, efficient, and legitimate way to access web data. That’s because website owners set them up to allow external programs to interact with their data in a structured and controlled manner.

The API works this way. You send a request to the API (using a URL with specific parameters). And in return, the API sends you the data you asked for. APIs provide data in a structured format (JSON or XML). So, it’ll be easier to handle and integrate into your systems. Another perk—some offer access to real-time data. But be aware of the limits on how many requests you can make. This is to prevent overloading the website’s servers.
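The request-and-response cycle described above might look like this in Python. It is a sketch under stated assumptions: the endpoint `https://api.example.com/v1/products`, the `category` and `page` parameters, and the `items` key in the response are all hypothetical, since every real API defines its own URL, parameters, and response shape. The one-second pause between calls is likewise just an illustrative way to stay under a request limit.

```python
import json
import time
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://api.example.com/v1/products"


def build_url(base_url, params):
    """Attach query parameters to the endpoint URL."""
    return f"{base_url}?{urlencode(params)}"


def parse_items(body):
    """Decode a JSON response body and return its list of items.

    Assumes the API wraps results in an "items" key.
    """
    payload = json.loads(body)
    return payload.get("items", [])


def fetch_pages(base_url, pages, delay_seconds=1.0):
    """Request several pages, pausing between calls to respect rate limits."""
    items = []
    for page in range(1, pages + 1):
        url = build_url(base_url, {"page": page})
        with urlopen(url, timeout=10) as response:
            items.extend(parse_items(response.read().decode("utf-8")))
        time.sleep(delay_seconds)
    return items
```

Many APIs also require an API key or token and document their own rate limits, so check the provider's documentation before adapting this.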

When you may want to extract data from a website using an API:

- Level of technical expertise. Basic to moderate (understanding API usage).

- Data volume. Ideal for varying data volumes, subject to API limits.

- Data complexity. Good for structured data provided by the API.

- Data collection frequency. Suitable for both one-time and regular updates.

- Budget. Often free or low-cost.

- Time. Requires some initial setup time.


Grab data from the website using browser extensions

Extracting a database from a website in just a few clicks? Yep, that’s what you can do with a web browser extension. You just add the tool to your browser—Chrome, Firefox, or any other—and you’re set to scrape. These extensions are usually user-friendly, so you really don’t need web scraping skills. All you have to do is navigate to the website from which you want to fetch data and select specific data elements. That simple.

But there’s a catch. The scope of data you can collect is limited by what the extension is programmed to do. Want sophisticated data? Think of a better way of getting it.

When to extract web data using a browser extension:

- Level of technical expertise. None to minimal.

- Data volume. Best for small to medium volumes of data.

- Data complexity. Suitable for simpler data structures.

- Data collection frequency. Good for occasional use.

- Budget. Usually free or low-cost.

- Time. Quick and easy for small-scale tasks.


Outsource extracting data from the web

Not keen on doing it yourself? Outsource it. This is the most traditional approach. And probably the least hands-on one.

Here’s the drill. You select a web scraping company, tell them what data you need and from which websites, and sign a contract. (By the way, Nannostomus also provides free data samples, so you know what you get before you commit.) Then comes the best part for you: the service provider works to get the website data for you. This can be a one-time project, or you can agree to get datasets within a specific timeframe. Data harvesting companies are usually flexible on these terms.

When to outsource web page data extraction:

- Level of technical expertise. None required from your end.

- Data volume. Scalable, suitable for any volume.

- Data complexity. Great for complex data structures.

- Data collection frequency. Flexible, from one-off projects to continuous scraping.

- Budget. Can be a significant investment.

- Time. Depends on the website’s complexity, but usually from 3 to 24 hours per website.


Conclusion

There are tons of data on websites. You can collect it through a meticulous manual method, sophisticated script-based coding, or user-friendly low/no-code tools. Just pick whatever feels right for your needs.