The journey to successful web scraping is often dotted with roadblocks. In particular, your scrapers might get blocked because you’re sending too many requests from a single machine. Or you can’t scale your data harvesting effort because of website restrictions. In these scenarios, web scraping proxies come to save the day. They turn the often challenging task of web scraping into a smoother, more efficient process and help your business avoid missing important information as you scrape the web. In this article, we’ll explain how.

What are proxies for web scraping?

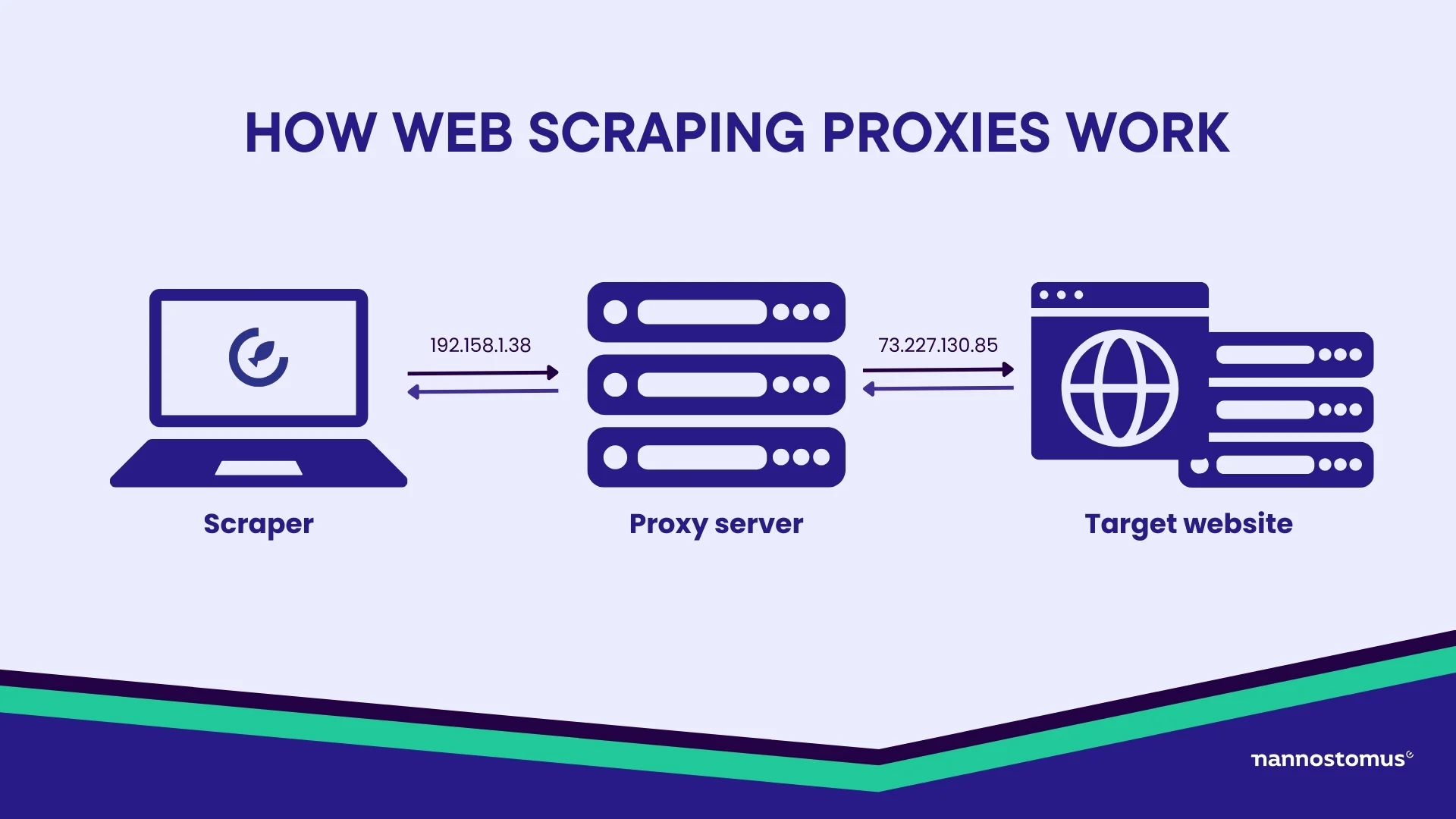

In its simplest form, a proxy server is a middleman that stands between you and the web. When you use proxies for web scraping, your requests to a website go through the proxy server first. The server then makes the request to the website on your behalf and brings back the information you need. In this process, your original IP address remains hidden, replaced by the proxy’s address.

So, using proxies to scrape information helps you operate undetected, navigate web scraping restrictions, and successfully gather data that the web offers.

How do scraping proxies work?

As you scrape data from the web, your crawler sends many requests from a single server with a single IP address. Many websites have protective measures in place, such as IP tracking systems, which may block your IP address and stop you from collecting information.

When you use proxy services for scraping, a proxy server mediates between the end user (you) and the target website from which you’re extracting data. So, instead of making a direct connection, your request is first sent to the proxy server. The server then forwards the request to the intended website on your behalf, using its own IP address in place of yours. The website sees the request coming from the proxy server’s IP address, not yours. This preserves your anonymity and reduces the likelihood of your scraping activity being detected or blocked.
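The flow described above can be sketched in Python with the standard library's `urllib.request`. This is a minimal illustration under stated assumptions, not a production scraper: the proxy address `proxy.example.com:8080` and the helper names `build_proxy_opener` and `fetch_via_proxy` are hypothetical placeholders, and a real provider will usually also require credentials.

```python
import urllib.request

# Hypothetical proxy endpoint; replace it with an address from your provider.
PROXY_URL = "http://proxy.example.com:8080"


def build_proxy_opener(proxy_url):
    """Create an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url, proxy_url=PROXY_URL, timeout=10.0):
    """Fetch url through the proxy and return the raw response body.

    The target website sees the proxy's IP address, not the caller's.
    """
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Calling `fetch_via_proxy("https://example.com/")` would route the request through the configured proxy, provided that proxy actually exists and accepts your connection.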

Types of proxies for scraping

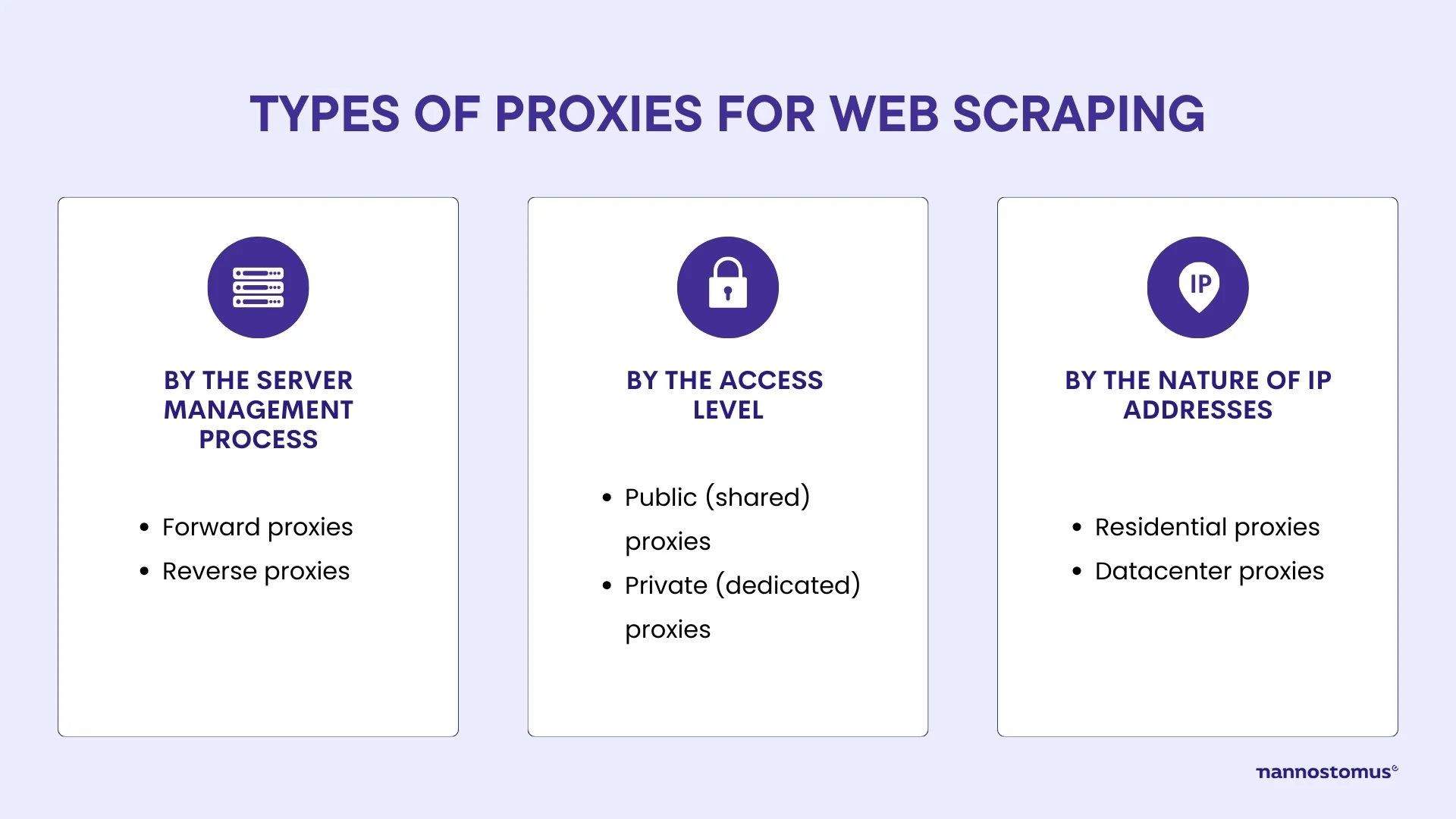

Different types of proxies are available on the market. Each can cater to your unique data extraction needs. But the best proxy for scraping meets your specific requirements, budget, and the level of complexity of your tasks. We’ve grouped the major types of proxies to help you better understand their nature and use.

Depending on which side of the connection they serve, proxies can be:

- Forward proxies. Also commonly referred to simply as ‘proxies’, these are the type we have been discussing so far. They sit on the client side, and all requests from the client to any server pass through them. They are commonly used to distribute load in large-scale data scraping tasks.

- Reverse proxies. These function at the other end of the communication line, near the web server. A reverse proxy accepts requests from clients on the internet, forwards them to the appropriate web server, and then sends the server’s response back to the client. Reverse proxies are not typically used for web scraping. Their main role lies in server management and protection, which makes an internet service more robust and reliable.

Based on the level of access, there are:

- Public or shared proxies. These are used by multiple users simultaneously. While they are cost-effective and sometimes even free, they may not offer the same level of performance, speed, or security as private proxies.

- Private or dedicated proxies. These are used exclusively by a single user. While they come with a higher price tag, they offer better performance, speed, and security.

By the nature of their IP addresses, proxies can be broken down into:

- Residential proxies. These use IP addresses that Internet Service Providers (ISPs) assign to homeowners, so they are tied to real physical locations. As a result, they look trustworthy and are less likely to be blocked by target websites. This makes residential proxies well suited for accessing geo-restricted content and for large-scale data scraping from websites with stringent security measures.

- Datacenter proxies. These proxies are not affiliated with ISPs. Instead, their IP addresses come from a third-party company, typically a cloud server provider. Although they lack the ‘human touch’ of a residential IP, datacenter proxies are faster and more affordable. They are a good choice for straightforward, large-scale data scraping tasks.

Why is it essential to use web scraping proxies?

Proxy servers have many uses, but full-scale web scraping would be impossible without them. Here are the key reasons to use proxies for scraping.

First is the anonymity they provide. As we said earlier, proxies mask your IP address so that your scraping activities are harder to detect. Rotating proxies, which change the IP address frequently, also help your scraping continue uninterrupted. And if scalability is a concern for you, proxies enable you to make numerous concurrent requests to speed up the data extraction process.

Beyond providing anonymity and scalability, scraping proxies also balance the load of requests. Websites often have mechanisms in place to limit the number of requests an IP address can make within a specific timeframe. If this limit is exceeded, the IP address could be temporarily or permanently blocked. Scraping proxies distribute requests across multiple IP addresses, so each address is more likely to stay under the limit. This way, they reduce the risk of detection and help keep data extraction uninterrupted.
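One simple way to spread requests across several IP addresses is round-robin rotation. The sketch below only plans which proxy each URL would use; it makes no network calls. The pool addresses and the `assign_proxies` helper are hypothetical, and commercial proxy services may rotate addresses for you in other ways.

```python
import itertools
from collections import Counter

# Hypothetical proxy endpoints; a real pool would come from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def assign_proxies(urls, proxy_pool):
    """Pair each URL with a proxy, cycling through the pool in order."""
    if not proxy_pool:
        raise ValueError("proxy_pool must contain at least one proxy")
    rotation = itertools.cycle(proxy_pool)
    return [(url, next(rotation)) for url in urls]


if __name__ == "__main__":
    urls = [f"https://example.com/page/{n}" for n in range(1, 7)]
    plan = assign_proxies(urls, PROXY_POOL)
    # Count how many requests each proxy would carry.
    print(Counter(proxy for _, proxy in plan))
```

Because the pool is cycled in order, no single address carries more than one request above any other, which keeps the per-IP request rate as low as the pool size allows.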

Some websites display different content or restrict access based on the visitor’s geographical location. By using a proxy server located in a specific region, you can bypass these geo-restrictions and scrape data regardless of its geographic availability.
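In code, geo-targeting often comes down to choosing a proxy by region before sending the request. The following sketch assumes a hand-maintained mapping from country codes to proxy addresses. The mapping, the addresses, and the `proxy_for_region` helper are all hypothetical, and many providers expose region selection through their own configuration instead.

```python
# Hypothetical mapping of country codes to region-specific proxy endpoints.
REGION_PROXIES = {
    "us": "http://us.proxy.example.com:8080",
    "de": "http://de.proxy.example.com:8080",
    "jp": "http://jp.proxy.example.com:8080",
}


def proxy_for_region(country_code, region_proxies=REGION_PROXIES):
    """Return the proxy configured for a country code, ignoring case and spaces."""
    key = country_code.strip().lower()
    try:
        return region_proxies[key]
    except KeyError:
        raise ValueError(f"No proxy configured for region {country_code!r}") from None
```

The returned address can then be passed to whatever HTTP client you use, so the target website treats the request as coming from that region.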

Conclusion

If you are tired of getting blocked while you fetch data online, the best proxies for web scraping come to the rescue. They will ensure uninterrupted web information extraction, even on a large scale.